Elements of AI growth

To grow and develop, AI needs three things: data, the capacity to store it and the capacity to process it. This raises a question that demands an immediate answer: how can the quality and veracity of this data be checked, and the right data "chosen", in a self-learning process?

As for the volume of data, the European Parliament's 2023 paper "Artificial Intelligence Threats and Opportunities" estimates that in 2025 the world could produce 175 zettabytes (ZB) of data, 175 times more than in 2010 (one zettabyte is 10²¹ bytes). For comparison, a very good laptop has a hard disk of 1 terabyte (TB), or 10¹² bytes. It is estimated that within the next 25 years data volumes will reach two further units of measurement: the ronnabyte (RB), equal to 10²⁷ bytes, and the quettabyte (QB), equal to 10³⁰ bytes. Where does this huge volume of data come from? From all of us, everywhere!
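The unit arithmetic in this paragraph can be checked with a few lines of Python. This is a minimal sketch assuming decimal (SI) prefixes, where each unit is 1,000 times the previous one, as storage figures usually are; the dictionary `BYTE_UNITS` and the helper `to_bytes` are illustrative names, not part of any library.

```python
# Decimal (SI) byte units: each prefix is 1,000 times the one before it.
BYTE_UNITS = {
    "KB": 10**3, "MB": 10**6, "GB": 10**9, "TB": 10**12, "PB": 10**15,
    "EB": 10**18, "ZB": 10**21, "YB": 10**24, "RB": 10**27, "QB": 10**30,
}


def to_bytes(amount: int, unit: str) -> int:
    """Convert an amount expressed in a decimal unit into bytes (exact integers)."""
    return amount * BYTE_UNITS[unit]


global_data_2025 = to_bytes(175, "ZB")  # the European Parliament estimate
laptop_disk = to_bytes(1, "TB")         # the "very good laptop" above

# How many 1 TB laptop disks would be needed to hold 175 ZB?
laptops_needed = global_data_2025 // laptop_disk
print(f"{laptops_needed:,} laptops")  # 175,000,000,000 laptops
```

In other words, the 2025 estimate would fill about 175 billion such laptop disks. Integers are used throughout so that the very large powers of ten stay exact.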

Three main factors have been identified for data growth:

- The Internet and access to it via smartphones. Every device continuously collects information about locations, activities, interests, language, images, etc. while it is active, and transmits it whenever it is connected to other devices.

- Social networks generate an enormous volume of data every day through messages and audio and video posts.

- New sensor-based assistance and surveillance technologies for essential life support systems and infrastructure (transport, energy, etc.).

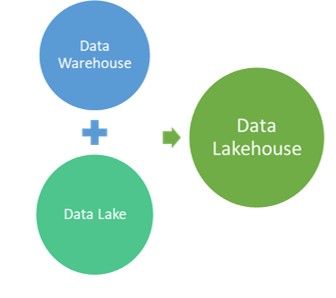

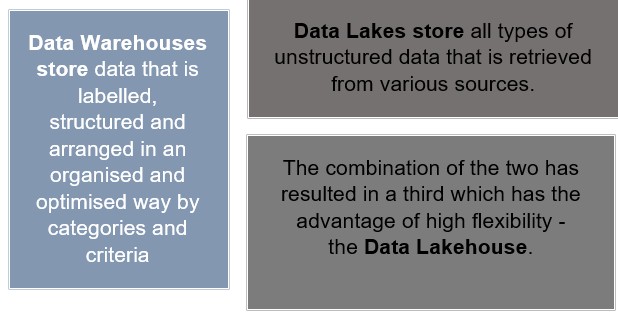

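This enormous amount of data must be stored and managed by huge servers whose carbon footprint puts even the most polluting traditional industries to shame. Data can be stored in data warehouses, which hold structured data organized according to a predefined schema, or in data lakes, which hold raw data in any format (text, images, sensor logs) and give it structure only when it is read.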

Figure 1 Data storage possibilities

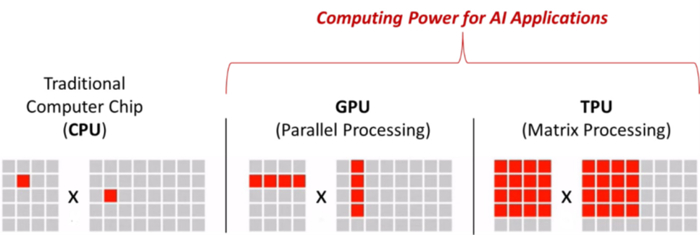
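The difference between the two storage options can be illustrated with a toy sketch. It assumes the usual distinction: a warehouse enforces a schema when data is written (schema-on-write), while a lake accepts raw data of any kind and imposes structure only when it is read (schema-on-read). The schema, field names and functions below are hypothetical, and plain Python lists stand in for real storage systems.

```python
import json

# Hypothetical warehouse schema: every record must have exactly these typed fields.
WAREHOUSE_SCHEMA = {"user_id": int, "city": str, "minutes_online": int}


def write_to_warehouse(table: list, record: dict) -> None:
    """Schema-on-write: reject the record unless it matches the schema exactly."""
    if set(record) != set(WAREHOUSE_SCHEMA):
        raise ValueError(f"fields {sorted(record)} do not match the schema")
    for field, expected_type in WAREHOUSE_SCHEMA.items():
        if not isinstance(record[field], expected_type):
            raise TypeError(f"{field} must be {expected_type.__name__}")
    table.append(record)


def write_to_lake(lake: list, raw: str) -> None:
    """The lake accepts anything and stores it untouched."""
    lake.append(raw)


def read_from_lake(lake: list, field: str) -> list:
    """Schema-on-read: structure is imposed only now, skipping what does not fit."""
    values = []
    for raw in lake:
        try:
            doc = json.loads(raw)
        except json.JSONDecodeError:
            continue  # e.g. a raw sensor line that is not JSON
        if isinstance(doc, dict) and field in doc:
            values.append(doc[field])
    return values


warehouse = []
write_to_warehouse(warehouse, {"user_id": 1, "city": "Paris", "minutes_online": 42})

lake = []
write_to_lake(lake, '{"user_id": 2, "city": "Rome"}')
write_to_lake(lake, "sensor-17 temp=21.5")  # not JSON, stored anyway
print(read_from_lake(lake, "city"))  # ['Rome']
```

The warehouse rejects anything that does not fit its schema up front; the lake keeps everything and postpones the work of interpreting the data until the moment it is used.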

Data processing capacity depends on computing power. In 1965, Gordon Moore, co-founder of Intel, observed that the number of transistors on an integrated circuit was doubling every year; in 1975 he revised this to a doubling every two years. Following this "Moore's Law", the power of chips has increased steadily since the 1960s while the size of their components has shrunk.
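Moore's observation can be written as N(t) = N₀ · 2^(t/T), where N₀ is the starting transistor count, t the elapsed time in years and T the doubling period (two years in the revised version). The sketch below simply evaluates this formula; the starting value of 2,300 transistors, the count usually quoted for Intel's first microprocessor (the 4004, 1971), is only an illustration, and real chips have not tracked the curve exactly.

```python
def projected_transistors(n0: float, years: float, doubling_period: float = 2.0) -> float:
    """Moore's Law projection: the count doubles once every `doubling_period` years."""
    return n0 * 2 ** (years / doubling_period)


# Fifty years of doubling every two years means 25 doublings (a factor of 2**25).
start = 2_300
after_50_years = projected_transistors(start, 50)
print(f"{after_50_years:,.0f} transistors")  # 77,175,193,600 transistors
```

Twenty-five doublings multiply the count by about 33.5 million, which shows how a doubling period that sounds modest compounds into enormous gains.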

In 1999, NVIDIA launched what it marketed as the first Graphics Processing Unit (GPU), the GeForce 256: a processor that, added to a computer, greatly increases its computing power. NVIDIA's chips caught on quickly: initially used for video games, they became essential for AI development. While a traditional processor (CPU) handles tasks largely in series (one after the other), a GPU processes many tasks in parallel, so it can handle a much larger volume of information at once.
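The serial-versus-parallel distinction can be sketched in Python with a typical graphics task: brightening every pixel of an image, where the same small operation is applied independently to each element. This is only an analogy under stated assumptions: a thread pool of four workers stands in for the thousands of cores on a GPU, and because of CPython's global interpreter lock this code will not actually run faster. What it shows is how data-parallel work is split into independent chunks. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int) -> int:
    """The same small operation, applied independently to every pixel (0-255)."""
    return min(pixel + 40, 255)


def brighten_serial(pixels: list[int]) -> list[int]:
    # Series: one pixel after the other.
    result = []
    for p in pixels:
        result.append(brighten(p))
    return result


def brighten_parallel(pixels: list[int], workers: int = 4) -> list[int]:
    # Parallel: split the image into chunks and give each chunk to a worker.
    chunk_size = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + chunk_size] for i in range(0, len(pixels), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the chunks it was given.
        processed = pool.map(lambda chunk: [brighten(p) for p in chunk], chunks)
        return [p for chunk in processed for p in chunk]


image = [0, 100, 220, 250, 30, 180]
print(brighten_serial(image))    # [40, 140, 255, 255, 70, 220]
print(brighten_parallel(image))  # [40, 140, 255, 255, 70, 220]
```

Both versions give the same result; the difference is that in the parallel version no chunk depends on another, which is exactly the property that lets a GPU process many elements at the same time.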

Figure 2 CPU vs GPU vs TPU (WTTC, 2024)

In 2016, Google introduced the Tensor Processing Unit (TPU), a chip specialized in the "matrix calculations" at the heart of neural networks (https://cloud.google.com/). Its processing power is staggering: a TPU v5, for example, can perform about 400 trillion calculations per second.
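The "matrix calculations" a TPU accelerates are mainly matrix multiplications, the core operation of a neural-network layer. The sketch below spells one out naively and estimates how long a large one would take at the 400-trillion-operations-per-second figure quoted above. It assumes the common convention of counting each multiply-add as two operations and ideal peak throughput, which real workloads rarely reach; the function names are hypothetical.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive matrix product C = A x B, where A is m x k and B is k x n."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("columns of A must equal rows of B")
    # Each output element is a dot product: k multiplications and additions.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)] for i in range(m)]


def matmul_operations(m: int, k: int, n: int) -> int:
    """Operations in an m x k by k x n product, counting a multiply-add as two."""
    return 2 * m * k * n


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]

PEAK_OPS_PER_SECOND = 400e12  # "about 400 trillion calculations per second"
ops = matmul_operations(4096, 4096, 4096)
print(f"{ops:,} operations, about {ops / PEAK_OPS_PER_SECOND * 1000:.2f} ms at peak")
```

At peak rate, the roughly 137 billion operations of a 4096 × 4096 product would take about a third of a millisecond; a naive Python loop like `matmul` above would need far longer for the same product, which is why such work is handed to dedicated hardware.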

When many chips are interconnected, they form a supercomputer, such as the one Microsoft built in 2020 and that was used to train ChatGPT. This supercomputer uses 10,000 GPUs and cost millions of dollars (Microsoft Announces New Supercomputer, lays out Vision for Future AI Work).

Bibliography

Bridle, J. (2023). The stupidity of AI. The Guardian. https://www.theguardian.com/technology/2023/mar/16/the-stupidity-of-ai-artificial-intelligence-dall-e-chatgpt

Google Cloud. (n.d.). Retrieved from https://cloud.google.com/tpu

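Microsoft. (n.d.). Microsoft announces new supercomputer, lays out vision for future AI work. Retrieved from https://news.microsoft.com/source/features/ai/openai-azure-supercomputer/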

Huawei Technologies Co., Ltd. (2023). Artificial Intelligence Technology. Beijing, China: Springer Nature Singapore Pte Ltd. https://doi.org/10.1007/978-981-19-2879-6

WTTC. (2024). Introduction to Artificial Intelligence (AI) Technology. Guide for travel & tourism leaders. UK.